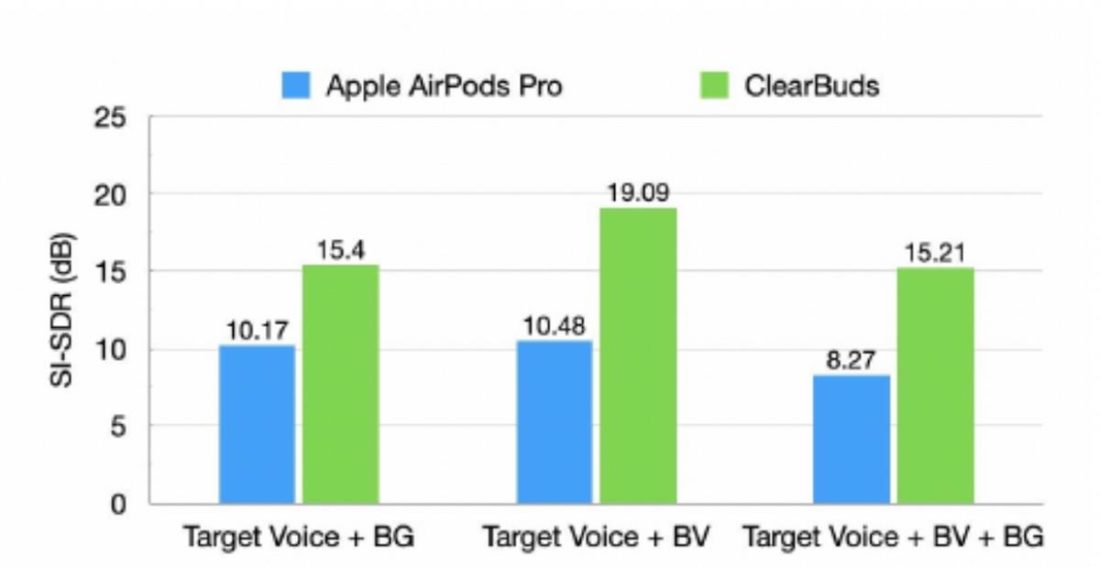

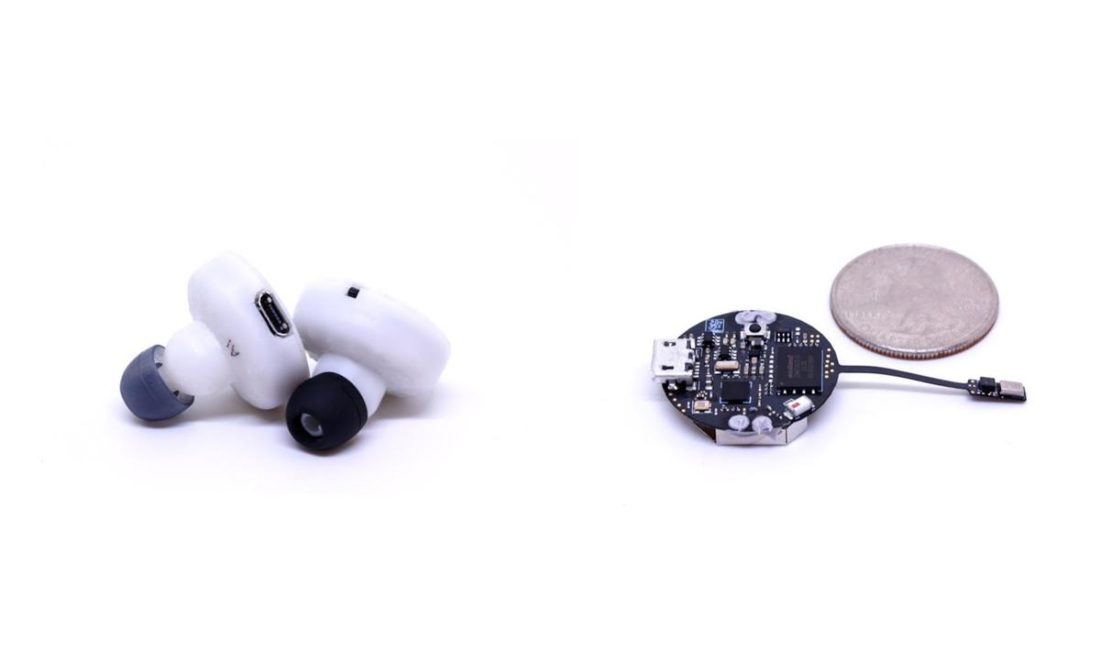

Presented at MobiSys ’22, the 20th Annual International Conference on Mobile Systems, Applications and Services, ClearBuds are wireless binaural earbuds designed to deliver high-quality speech enhancement during calls. Rather than relying on the microphones of a single earbud, ClearBuds stream audio from both earbuds to a paired device, where a neural network isolates and enhances the wearer's voice and removes background noise in real time. The network was developed to run on an iOS device and is enabled by turning on the noise suppression button in the accompanying app. In the paper “ClearBuds: Wireless Binaural Earbuds for Learning-based Speech Enhancement,” the researchers describe two key technical contributions, a synchronized binaural microphone array and a lightweight neural network, and report a synchronization error of less than 64 microseconds between the earbuds and a network runtime of 21.4 ms on a paired mobile phone. The team also compared ClearBuds' noise suppression with that of the Apple AirPods Pro, and ClearBuds performed better in three tasks: separating the target voice from background noise (e.g., traffic), from background voices, and from a combination of both. The project was developed by roommates and fellow University of Washington researchers Ishan Chatterjee, Maruchi Kim, and Vivek Jayaram, along with Shyam Gollakota, Ira Kemelmacher, Shwetak Patel, and Steven Seitz.
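To put the reported 64 µs synchronization bound in perspective, the short calculation below converts it into audio samples and into the distance sound travels in that time. The article does not state ClearBuds' sample rate, so 16 kHz and 48 kHz are assumed example rates; the 18 cm ear-to-ear spacing and the 343 m/s speed of sound are also assumptions, not figures from the paper.

```python
# Assumed constants: the article does not state ClearBuds' sample rate or head size.
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, dry air at about 20 degrees C
SYNC_ERROR_S = 64e-6            # reported upper bound on earbud synchronization error
ASSUMED_EAR_SPACING_M = 0.18    # hypothetical ear-to-ear distance


def seconds_to_samples(duration_s: float, sample_rate_hz: float) -> float:
    """Number of audio samples spanned by duration_s at the given sample rate."""
    return duration_s * sample_rate_hz


def seconds_to_path_m(duration_s: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance sound travels in duration_s seconds, in metres."""
    return duration_s * c


# Largest inter-ear delay: a sound arriving from directly to one side.
max_inter_ear_delay_s = ASSUMED_EAR_SPACING_M / SPEED_OF_SOUND_M_PER_S

if __name__ == "__main__":
    for rate in (16_000, 48_000):  # assumed example sample rates
        print(f"{rate} Hz: sync error = {seconds_to_samples(SYNC_ERROR_S, rate):.3f} samples")
    print(f"sync error as path difference: {seconds_to_path_m(SYNC_ERROR_S) * 100:.2f} cm")
    print(f"max inter-ear delay: {max_inter_ear_delay_s * 1e6:.0f} us")
```

Under these assumptions the bound works out to about 1 sample at 16 kHz and about 3 samples at 48 kHz, or roughly 2.2 cm of acoustic path, well below the roughly 525 µs maximum inter-ear delay for the assumed spacing. That margin is what lets timing differences between the two earbuds carry spatial information instead of being swamped by clock error.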

How ClearBuds Enhance a Speaker’s Voice

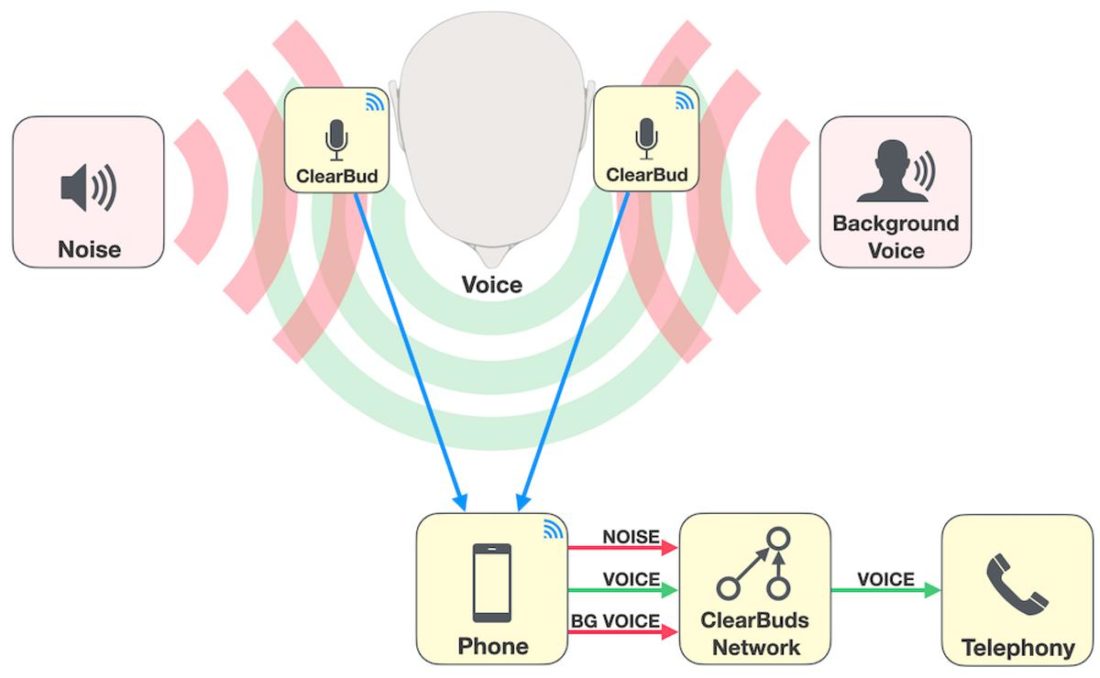

The earbuds are designed to operate as a synchronized binaural microphone array. Earbuds currently on the market typically combine several microphones, usually up to six, each with a specific role, into a single microphone system that relies on directionality (the polar pattern) to decide which direction to pick up sound from. That system sends only one audio stream to the connected device. ClearBuds instead stream from a separate microphone system in each earbud. As the researchers explained in an interview with UW News, the wearer's voice originates “close by and approximately equidistant from the two earbuds,” so it reaches both earbuds at nearly the same time. This makes it easier to focus on the wearer's voice and filter out sounds, such as background noise and other voices, that arrive from elsewhere. Beyond microphone placement, ClearBuds rely on a lightweight neural network that can run as an app on a mobile phone. Once the phone receives the binaural input from both earbuds, the network processes the two audio streams in real time: it suppresses non-speech sounds such as traffic or a roommate vacuuming, as well as other people's voices, and separates and amplifies the wearer's voice captured by the microphones in each bud.
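The equidistance argument can be made concrete with a classical time-difference-of-arrival estimate. The sketch below cross-correlates the left and right channels: a source equidistant from both earbuds (the wearer) shows a lag near zero, while a source off to one side shows a nonzero lag. It uses random noise as a stand-in for real audio, and the 20-sample delay (about 0.42 ms at an assumed 48 kHz) is hypothetical. This only illustrates the spatial cue; it is not ClearBuds' method, which leaves the processing to a learned neural network.

```python
import random


def delayed(signal: list[float], delay: int) -> list[float]:
    """Shift signal later by `delay` samples (earlier if negative), zero-padding to keep its length."""
    n = len(signal)
    delay = max(-n, min(n, delay))  # clamp so the output length always equals n
    if delay >= 0:
        return [0.0] * delay + signal[: n - delay]
    return signal[-delay:] + [0.0] * (-delay)


def estimate_lag(left: list[float], right: list[float], max_lag: int) -> int:
    """Lag (in samples) by which `right` trails `left`, found by brute-force cross-correlation."""
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag] over the indices where both exist.
        score = sum(left[i] * right[i + lag] for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


rng = random.Random(0)
N = 2_000
voice = [rng.gauss(0.0, 1.0) for _ in range(N)]       # stand-in for the wearer's speech
interferer = [rng.gauss(0.0, 1.0) for _ in range(N)]  # stand-in for a side-on noise source
INTERFERER_DELAY = 20  # hypothetical: ~0.42 ms at an assumed 48 kHz sample rate

# Wearer: equidistant from both earbuds, so both channels see the same signal.
voice_left, voice_right = voice, delayed(voice, 0)
# Side source: reaches the left earbud first and the right earbud later.
noise_left, noise_right = interferer, delayed(interferer, INTERFERER_DELAY)

print("wearer lag:", estimate_lag(voice_left, voice_right, max_lag=30))
print("interferer lag:", estimate_lag(noise_left, noise_right, max_lag=30))
```

Running it reports a lag of 0 for the wearer and 20 for the side source. The brute-force loop costs O(N × lags); a real implementation would typically use FFT-based correlation, and a learned model can combine this kind of spatial cue with spectral cues rather than computing it explicitly.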

When to Expect Clearer Calls in the Future With ClearBuds

ClearBuds use a custom wireless audio protocol that lets the microphones in two separate earbuds stream to a single mobile phone at the same time. Commercially available earbuds still stream from only one microphone system at a time, but the researchers are hopeful this can change in the future now that Bluetooth 5.2 introduces Multi-Stream Audio and Audio Broadcast. Note also that the noise suppression function currently works only when both earbuds are worn. Members of the team continue to work on the systems developed for these earbuds, including further research into neural network algorithms, and they note that the real-time communication system behind the device could be adopted by other mobile systems and machine learning researchers. Improving audio quality for calls in noisy environments could also benefit smart-home speakers, tracking robot locations, and search and rescue missions, where hearing audio accurately is vital.
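The article does not describe ClearBuds' custom protocol in detail, but a two-stream design raises an obvious receiver-side question: how does the phone line up packets that arrive independently from each earbud? The sketch below assumes, in the spirit of the tight synchronization the paper reports, that both earbuds stamp each packet with its first sample index on a shared clock. The `Packet` type, `align_streams` function, and silence-filling policy are all hypothetical and are not taken from the ClearBuds implementation.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    """Hypothetical mic packet: first sample index on a clock shared by both earbuds, plus PCM samples."""
    start_sample: int
    samples: list[float]


def align_streams(left: list[Packet], right: list[Packet], frame_len: int):
    """Pair left/right packets that start at the same shared-clock sample index.

    A packet lost on one side is replaced with silence so the two channels
    stay time-aligned before being fed to a two-channel (binaural) model.
    """
    left_by_start = {p.start_sample: p.samples for p in left}
    right_by_start = {p.start_sample: p.samples for p in right}
    frames = []
    for start in sorted(left_by_start.keys() | right_by_start.keys()):
        frames.append((
            start,
            left_by_start.get(start, [0.0] * frame_len),   # fresh silent frame if missing
            right_by_start.get(start, [0.0] * frame_len),
        ))
    return frames


# Example: the right earbud's packet starting at sample 4 was lost in transit.
left_stream = [Packet(0, [0.1] * 4), Packet(4, [0.2] * 4), Packet(8, [0.3] * 4)]
right_stream = [Packet(0, [0.5] * 4), Packet(8, [0.7] * 4)]

for start, l_frame, r_frame in align_streams(left_stream, right_stream, frame_len=4):
    print(start, l_frame, r_frame)
```

Keying on a shared sample index, rather than on arrival time at the phone, keeps the channels aligned even when radio delays differ between the two earbuds. Filling a lost packet with silence is only one possible policy; it also hints at why a binaural model degrades when one earbud's stream is unavailable.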